KNNOR: An oversampling technique for imbalanced datasets

Enhancing Minority Class Representation in Imbalanced Datasets with Advanced K-Nearest Neighbor Oversampling

Abstract.

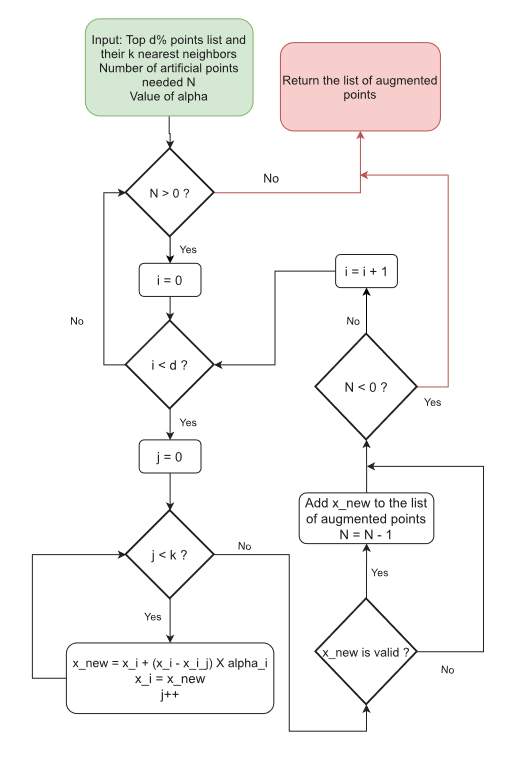

Imbalanced datasets significantly hinder the performance of machine learning models. Existing techniques such as SMOTE and ADASYN struggle with ‘within class imbalance’ and the ‘small disjunct problem’. We propose K-Nearest Neighbor Oversampling (KNNOR), which sorts minority class points by the distance to their \(k\)th nearest neighbor, generates synthetic points iteratively, and validates each synthetic point using \(k\)-nearest neighbor classification. This validation step makes the oversampling more reliable and avoids amplifying noise. For example, given minority data points \(\{x_1, x_2, x_3\}\), a new point is computed as \(x_{new} = x_i + \alpha \cdot (x_{near} - x_i)\) for \(i \in \{1, 2, 3\}\) and \(\alpha \in [0, 1]\), where \(x_{near}\) is a neighboring minority point of \(x_i\), so that \(x_{new}\) lies on the line segment between \(x_i\) and \(x_{near}\). Experimental results show that KNNOR ranks first across several datasets and classifiers when compared with ten top oversamplers. The method is available as an open-source Python library.
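The three steps named in the abstract (sort by distance to the \(k\)th neighbor, interpolate, validate) can be sketched as follows. This is a minimal NumPy illustration and not the API of the released library: the function name `knnor_sketch` and the parameters `keep_fraction`, `n_new`, and `seed` are hypothetical. It assumes that each synthetic point is interpolated toward a minority neighbor, \(x_{new} = x_i + \alpha (x_{near} - x_i)\), and that validation is a simple majority vote among the \(k\) nearest original points.

```python
import numpy as np


def knnor_sketch(X, y, minority_label, n_new, k=3, keep_fraction=0.5, seed=0):
    """Illustrative KNNOR-style oversampler (hypothetical, not the library's API)."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    X_min = X[y == minority_label]
    if k >= len(X_min):
        raise ValueError("k must be smaller than the number of minority points")

    # Pairwise distances among minority points; mask out self-distances.
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)
    neighbor_idx = np.argsort(d, axis=1)[:, :k]  # k nearest minority neighbors
    kth_dist = np.take_along_axis(d, neighbor_idx[:, -1:], axis=1).ravel()

    # Step 1: sort by distance to the k-th nearest neighbor and keep the
    # closest-packed fraction as seed points (keep_fraction is an assumption).
    order = np.argsort(kth_dist)
    n_keep = max(1, int(np.ceil(keep_fraction * len(order))))
    seeds = order[:n_keep]

    synthetic = []
    attempts = 0
    max_attempts = 50 * n_new  # guard against endless rejection
    while len(synthetic) < n_new and attempts < max_attempts:
        i = seeds[attempts % len(seeds)]
        attempts += 1

        # Step 2: interpolate between the seed and one of its neighbors.
        j = rng.choice(neighbor_idx[i])
        alpha = rng.uniform(0.0, 1.0)
        x_new = X_min[i] + alpha * (X_min[j] - X_min[i])

        # Step 3: accept only if most of the k nearest original points
        # belong to the minority class.
        dist_all = np.linalg.norm(X - x_new, axis=1)
        votes = y[np.argsort(dist_all)[:k]]
        if np.sum(votes == minority_label) > k / 2:
            synthetic.append(x_new)

    return np.array(synthetic).reshape(-1, X.shape[1])
```

Calling `knnor_sketch(X, y, minority_label=1, n_new=4)` returns up to four validated synthetic rows that can be appended to `X` with the minority label. Fewer rows are returned if too many candidates fail validation.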

Illustration of the proposed experimental design.